With Patience and Dedication

Introduction to Sicilian NLP

table of contents

- introduction

- machine translation

- parallel text

- subword splitting

- low-resource NMT

- multilingual translation

- reverse training strategy

Sicilian language

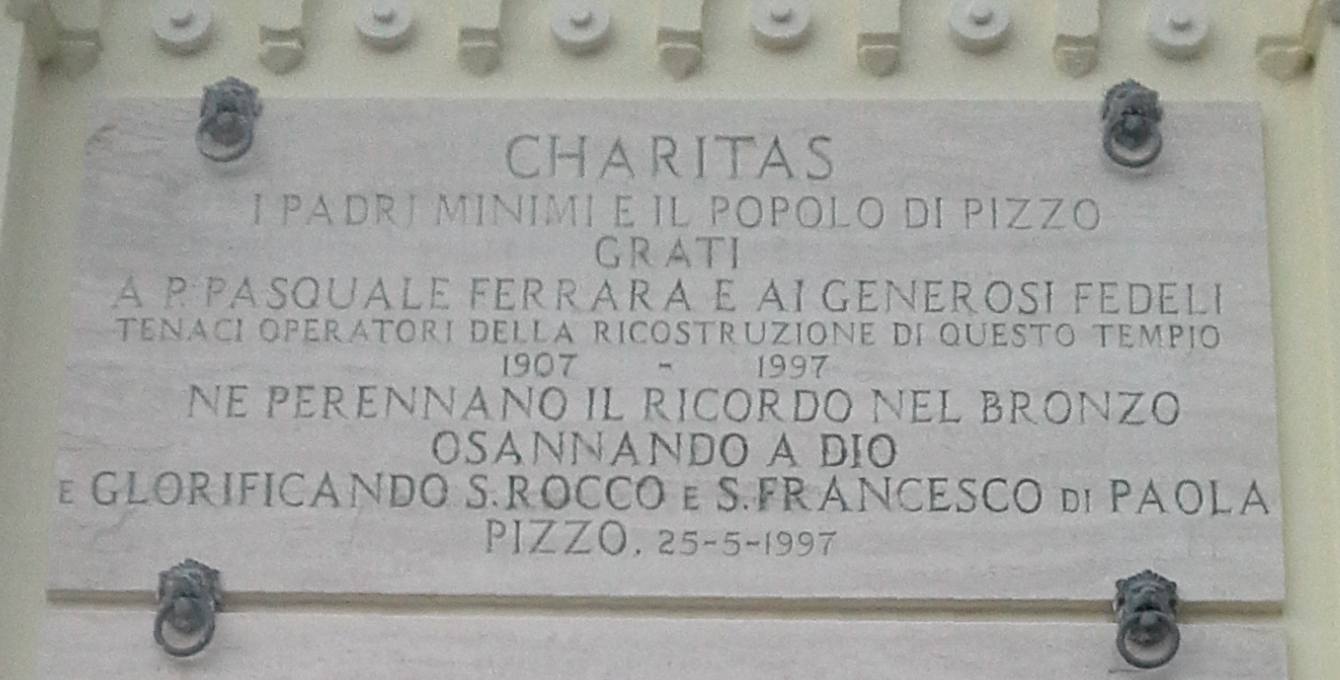

In 1905, an earthquake killed hundreds of people and destroyed the Church of Saint Rocco and Saint Francis of Paola.

Two years later, the people of Pizzo Calabro carried bricks in a procession to the site and ceremonially began to rebuild the church. Then they spent the next 90 years rebuilding their church.

The people who vowed to rebuild the church knew that they would never see its completion. But they knew that their grandchildren would. And they knew that their descendants would celebrate baptisms, funerals and marriages in the church hundreds of years later. So they rebuilt it.

With patience and dedication to a clear long-term vision, you can create amazing things.

So I've been steadily assembling a corpus of parallel text to create a machine translator for the Sicilian language. It now translates simple sentences fairly well. With a little more work, we will soon have a good-quality translator.

And I hope this work helps other people develop neural machine translators for low-resource languages.

Sicilian is a good case study for several reasons. First, the language has been continuously recorded since the Sicilian School of Poets joined the imperial court of Frederick II in the 13th century.

And in our times, Arba Sicula has spent the past 40 years translating Sicilian literature into English (among its numerous activities to promote the Sicilian language).

In the course of their work with the many dialects of Sicilian, they also established a "Standard Sicilian," which has enabled us to create a high-quality corpus of Sicilian-English parallel text.

High-quality parallel text is the necessary ingredient in any neural machine translation project. And recent advances in the field have made it possible to develop neural machine translators with limited amounts of parallel text.

In our initial experiments, we achieved a BLEU score of 25.1 on English-to-Sicilian translation and 29.1 on Sicilian-to-English with just 16,945 translated sentence pairs containing 266,514 Sicilian words and 269,153 English words.
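BLEU scores a translation by counting how many of its word n-grams also appear in a reference translation, then penalizing output that is shorter than the reference. The sketch below is a minimal corpus-level BLEU on the usual 0 to 100 scale, assuming whitespace tokenization, one reference per sentence, n-grams up to length 4, and no smoothing. It is only an illustration of the metric, not the tool that produced the scores reported here; standard tools tokenize differently and will give somewhat different numbers.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU (0 to 100) with one reference per hypothesis.

    Assumes whitespace tokenization and applies no smoothing, so the
    score is 0 if any n-gram order has no matches at all.
    """
    matches = [0] * max_n  # clipped n-gram matches, per order
    totals = [0] * max_n   # n-grams in the hypotheses, per order
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each n-gram's count by how often it occurs in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if min(matches) == 0:
        return 0.0
    # Geometric mean of the modified n-gram precisions.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: output shorter than the references is penalized.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)


print(corpus_bleu(["the cat sat on the mat"], ["the cat sat on the mat"]))  # 100.0
```

Changing a single word ("the" to "a") in that example drops the score to about 53.7, because one wrong word breaks every n-gram that spans it.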

That's a good result for a small amount of parallel text. Then we added more parallel text.

We opened grammar books and translated all of the homework exercises. That alone gave us thousands of examples. Then, with a basic translator, we back-translated monolingual text to create a few thousand more, which further improved translation quality.
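In the standard formulation of back-translation, each human-written sentence in the target language is paired with a machine translation of it into the source language, and the resulting synthetic pairs are added to the training data. A minimal sketch, assuming a hypothetical `translate` callable that wraps an existing reverse translator; the text does not say which toolkit or translation direction was used for this step.

```python
from typing import Callable, Iterable, List, Tuple


def back_translate(
    monolingual: Iterable[str],
    translate: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Build synthetic (source, target) pairs from target-side monolingual text.

    `translate` is a hypothetical stand-in for a reverse model that
    translates from the target language back into the source language.
    The human-written sentence stays on the target side, so the forward
    model is trained to produce fluent, authentic output.
    """
    pairs = []
    for target in monolingual:
        target = target.strip()
        if not target:
            continue  # skip blank lines
        synthetic_source = translate(target)
        pairs.append((synthetic_source, target))
    return pairs


# Toy usage: str.upper stands in for a real reverse translator.
pairs = back_translate(["first line", "", "second line"], str.upper)
print(pairs)  # [('FIRST LINE', 'first line'), ('SECOND LINE', 'second line')]
```

Keeping the authentic sentence on the target side is the usual design choice: errors from the basic translator end up on the input side, where they do less harm than in the output the model learns to imitate.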

As a general matter, you can always find ways to assemble more parallel text.

And parallel text in another language can help too. For reasons explained on the multilingual translation and reverse training strategy pages, adding Italian-English data greatly improved our translation quality. It pushed our BLEU scores up to 45.1 on the English-to-Sicilian side and to 48.6 on Sicilian-to-English.

The traditional recommendation for languages without any parallel text has been to create a rules-based translator, using a framework like Apertium. But rules are difficult to write and, after a certain point, writing more rules won't improve translation quality very much.

But you can always find ways to create more parallel text. With patience and dedication, you can assemble tens of thousands of sentence pairs. Then assemble ten thousand more to further improve translation quality.

So I have prepared these notes in the hope that they will help bring neural machine translation to low-resource languages.

The machine translation page explains what neural machine translation is and how it differs from previous approaches. The parallel text page discusses recent progress in the field and the dataset that we're developing. The subword splitting page describes the method of splitting words into subword units, which reduces the vocabulary size and makes low-resource neural machine translation possible.
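One widely used subword method is byte-pair encoding (BPE): start from single characters and repeatedly merge the most frequent adjacent pair of symbols, so that common words become single units while rare words are split into smaller, reusable pieces. The sketch below illustrates that general technique, assuming an end-of-word marker `</w>` and a toy word-frequency table; it is not necessarily the exact method or settings described on the subword splitting page.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace each adjacent occurrence of `pair` in `symbols` with one symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out


def learn_bpe(word_counts, num_merges):
    """Learn BPE merge operations from a {word: frequency} table.

    Each word starts as its characters plus an end-of-word marker,
    so merges never cross word boundaries.
    """
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        vocab = {tuple(merge_pair(s, best)): f for s, f in vocab.items()}
    return merges, vocab


def segment(word, merges):
    """Split a word into subwords by applying the learned merges in order."""
    symbols = list(word) + ["</w>"]
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols


counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, _ = learn_bpe(counts, num_merges=4)
print(merges)                     # [('e', 's'), ('es', 't'), ('est', '</w>'), ('l', 'o')]
print(segment("lowest", merges))  # ['lo', 'w', 'est</w>']
```

The word "lowest" never appears in the toy table, yet it is still represented with learned pieces. The number of merges is what controls the size of the subword vocabulary.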

And the low-resource NMT page describes the model and training methods that we used to develop a translator for the Sicilian language. Using high dropout parameters to train a small Transformer model on parallel text with a small subword vocabulary yields relatively good translation quality (as measured by BLEU score) with limited amounts of parallel text.
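Dropout randomly zeroes a fraction of a layer's activations at each training step, so the network cannot lean on any single unit. That helps a small model avoid memorizing a small corpus. The pure-Python sketch below shows standard "inverted" dropout on a list of activations to illustrate the mechanism only. The actual dropout values used for the Sicilian model are the ones on the low-resource NMT page, and toolkits such as Sockeye apply dropout internally rather than by hand-written code like this.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout on a list of activations.

    During training, each value is zeroed with probability `rate` and the
    survivors are scaled by 1 / (1 - rate), so each unit's expected value
    is unchanged. At inference time the input passes through as-is.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [x / keep if rng.random() < keep else 0.0 for x in activations]


rng = random.Random(0)
x = [1.0] * 10
print(dropout(x, rate=0.5, rng=rng))       # each entry is 0.0 or 2.0
print(dropout(x, rate=0.5, training=False))  # x, unchanged
```

A "high" dropout setting simply means a larger `rate`: more units are silenced on each step, which is a stronger regularizer.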

So we have good reason to be optimistic about the potential to bring neural machine translation to low-resource languages.

As I develop these notes, I will also include information about how to align sentences with hunalign and how to set up the Sockeye toolkit. In the meantime, I have appended some background information on how the machine learns to translate. The word context and word embeddings pages provide a simpler example of how neural networks identify context from a sequence.

And at Napizia, you can follow our progress in developing un Tradutturi Sicilianu.

Copyright © 2002-2025 Eryk Wdowiak